Throughout the project I used the Double Diamond methodology, but as a team we decided to use Design Thinking in the pre-development phase to map out our first priorities for developing the experience.

Empathize:

Objective: Understand the needs of the client and the target audience.

Define:

Objective: Define the goals for the project.

Ideate:

Objective: Explore a wide variety of ideas and solutions for the AR installation.

Prototype:

Objective: Create the different prototypes for the AR installation.

Test:

Objective: Test prototypes and gather feedback.

You can find my learning outcomes by clicking here.

In my opinion, I more than achieved my learning outcomes. I built a complete Augmented Reality (AR) experience from the ground up in the eighteen weeks we had, which in most cases went beyond what I had planned in my learning outcomes.

A detailed view of my learning outcomes and how I completed them:

During the development of the game, I learned more about how to design for a user in AR. Most of this came from my own testing, but also from reading studies and watching videos online. This enabled me to make appropriate decisions on certain topics we came across during development, most of which concerned how to hint to the player that an interaction was possible.

Testing also made it clear to me that scale is very important in AR and can be tricky to get right. That is why, in the first few weeks, I set up a simple way to change the scale of objects, so that we could iterate more easily and settle on the scales to use in the final product.
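As an illustration of that kind of scale control, the sketch below keeps an object's authored scale and applies a clamped multiplier that a tester could adjust at runtime. It is written in plain C# with System.Numerics so it runs outside Unity. The ScaleTuner name, the 0.1 to 5 range and the idea of driving it from a debug slider are assumptions, not the project's actual code.

```csharp
using System;
using System.Numerics;

// Hypothetical helper (not the project's code): stores a model's authored scale and
// applies a clamped multiplier so testers can try different AR sizes quickly.
public class ScaleTuner
{
    public Vector3 BaseScale { get; }
    public float MinMultiplier { get; }
    public float MaxMultiplier { get; }
    public float Multiplier { get; private set; } = 1f;

    public ScaleTuner(Vector3 baseScale, float minMultiplier = 0.1f, float maxMultiplier = 5f)
    {
        if (minMultiplier <= 0f || maxMultiplier < minMultiplier)
            throw new ArgumentException("The multiplier range must be positive and ordered.");
        BaseScale = baseScale;
        MinMultiplier = minMultiplier;
        MaxMultiplier = maxMultiplier;
    }

    // Sets the multiplier (for example from a debug slider), clamped to the allowed range.
    public void SetMultiplier(float value) =>
        Multiplier = Math.Clamp(value, MinMultiplier, MaxMultiplier);

    // The scale to apply to the object.
    public Vector3 CurrentScale => BaseScale * Multiplier;
}
```

In Unity, CurrentScale would be copied into the object's transform.localScale whenever the multiplier changes.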

The main deliverable for this learning outcome was a detailed document outlining the various components. While I did not write that document, I did produce a number of prototypes that clearly demonstrate how the system works.

I decided against writing a detailed document because I had already documented the technical details internally in our Notion space, and I felt it was better to show rather than tell. That is why, over the course of one month, I published three 'release' prototypes on our GitHub. You can find them here. These prototypes all demonstrate the system's capabilities in terms of tracking, live transforming and more.

Besides that, I learned a lot about developing an XR application. This was the first time I had worked with this kind of technology, which is why it seemed daunting beforehand, but in hindsight it was not that much of a struggle.

I definitely completed this outcome, although not in the way I had planned. Instead of a document, I developed multiple useful prototypes, which I released well within the planned first three months. Since then, my work has proven to be a solid foundation for the rest of the project.

When I originally set this outcome, I was under the impression that I would only work on small interactions with placed objects, as our client had mentioned. These included clicking bushes to see butterflies and other small things like that. However, after the second week our client decided to relieve the second studio (originally responsible for interactions) of their duties and have me develop those as well. My learning outcome did not change as such, but it did cover much more than originally intended.

In the first five months of this project I developed all interactions currently in the game. These include collecting paper, collecting trash and throwing seeds, as well as lighter interactions such as selecting a flower pack and collecting the bracelet and compass. I would say I have more than completed this outcome, as I did much more than initially anticipated.

In my opinion, I have definitely completed this outcome too. It was a rocky road with many difficulties along the way, but I always communicated as clearly and effectively as possible. The main issue was that our client was a difficult personality to handle, and he unfortunately lacked the imagination to see what I was working on most of the time. This meant I had to do extra work just so he could understand my progress. It also led me to show him less and less as the project advanced, because presenting the work took a lot of time and the work itself became more complex later on.

However, I always kept him as up to date as possible given these difficulties. I also replied to his messages quickly and in a professional and understanding tone. The final project aligns with what was presented to us during the first week, and I feel we delivered a solution that fits what the client was looking for.

Regarding my learning outcomes, I am very proud of, and satisfied with, the programming skills I showed during this project. It was the first time I worked on a project as a programmer, having originally come from a designer role. As a result, I started with doubts about whether I would be able to tackle the issues the project had in store for me. In the end, I can look back with pride on everything I accomplished. I was able to demonstrate my skills throughout the project and gained a great deal of new knowledge about developing an extended reality application.

The major downside of this whole process was the trouble we had with our client. What started in the first week with inappropriate comments and jokes eventually led to a heated and tense meeting in which more inappropriate and hurtful comments were made; these were unfounded, unfair to us as a team and, honestly, very unprofessional. In the paragraphs below, I outline the behaviour our client showed and the issues that made it difficult to work with him on this project.

In the first week of the project, we met Abner Preis, our client, and immediately had some concerns. He did not seem to understand our study programme: neither what we do as students nor which roles we are used to working in. He also did not listen and kept repeating himself until we nodded in agreement. In addition, he made some comments with sexist and racist undertones. While these were not bad enough to abandon the project then and there, they do provide valuable insight into our client's character and the struggles we endured.

Over the next few weeks we sent him a list of times when we would be available for a meeting. Most of these were on Monday, Tuesday or Thursday, around 11:00, 13:00 or 15:00. However, more than once this was completely disregarded and he insisted on meeting on Friday, even though we had made it clear that Friday was not a working day for the project.

A few weeks into the project, a team member was struggling with their mental health and therefore took some time off. We were not allowed to disclose this to our client, because the team member was afraid of how he might react.

Later in the project, after the mid-term presentation, Abner sent our guiding teacher an email. The email was reportedly harsh in tone and expressed sudden concerns about our progress. This came out of nowhere and left us all stunned: there had been no issues so far and no reason to doubt that we would make the deadline. This email then led to a meeting in which the teachers, Abner and Joris (team lead) decided to set up weekly meetings.

The first two meetings that followed went fine, but they provided no meaningful insights or information whatsoever; their only effect was to make our client feel more in control. The meetings were also quite tense at times, as I raised some issues with him regarding the voice overs and other decisions or promises that were not kept.

Then, on the 10th of June, we had another meeting. During this meeting Abner raised his voice, yelled at us for not working hard enough, kept mentioning crunch and how nothing was good enough, compared team members directly to shame one of them about their working speed, and did not listen to a word we said, instead repeating himself until we agreed (the same behaviour as in week one). During the meeting, most of our team members walked away to distance themselves from the heated and tense conversation, and at least one of them said they felt unsafe and uncomfortable.

Over the next few days he micromanaged everything, sending several messages a day asking to see progress (even though we had thoroughly explained that this would not be useful). When I explained that we were still working on the same thing as an hour earlier, some of his replies had a pointed, negative tone ("So no progress."). All of this led us, after discussing it with our guiding teacher, to terminate all contact with immediate effect on the 13th of June.

In the end, I am happy that we broke off contact, but I wish we had done it sooner. Within the first week, other team members and I had already discussed among ourselves how Abner came across as insincere and lacking in understanding and listening skills. These concerns were confirmed later on, but as far as I am concerned it should never have got that far.

From the first meeting onwards, it was clear that Abner knew little about our study programme and what we do as CMGT students. In addition, he had never worked with developers who build everything themselves from scratch. This led to frustration later on, as he felt we were too slow even when we were working at an adequate pace.

During the project he suggested some odd game design ideas more than once. Other team members and I tried to explain why an idea would not help, or why it was not worth spending time testing it. However, in most cases he could not accept no for an answer. As a result, I added some unnecessary features in the first weeks and tried out things only to scrap them later. I believe Abner did not understand how long things can take, and therefore thought it was easy to try something out every now and then.

Besides that, he has no knowledge of the technical and design side of things: his projects consist of countless non-interactive PNGs, and all actual programming is done by someone else. His own contribution has been placing images on the screen and adding store-bought assets (based on what he told us in the first week). All of this made it increasingly difficult to work with him, as he simply could not understand what we were working on and why it took so long, and he also did not try to. We tried numerous times to explain in very simple language what was happening and why it takes time. Unfortunately, he did not pick up on this and instead kept repeating that he wanted to see progress or wanted to see x implemented.

Put simply, I would not participate in a project that is meant to deliver a commercial product. Looking at the other projects around me in studio one, it is clear that their pressure and tension were nowhere near what we experienced. As far as I can tell, this is because our client depended on us to deliver his product and felt the need to pressure us to finish it before his deadline. The other projects, by contrast, are all research-based: they deliver their work to their respective clients without a commercial goal, and it is used to support ongoing research.

Another thing I would do differently is speak up about our client's behaviour earlier, which would hopefully have led to ending contact sooner. That could also have saved us from major issues and struggles.

During the project I carried out several different activities within the various steps of the design model we used.

This started with researching the issues at hand, their causes and their effects. Most of my research went into relevant Unity tutorials, looking into previous solutions I had made, and interviewing other students and teachers.

I combined these resources to identify the main issue I was trying to address. Most of the time I focused on the biggest problem first, so that I could finish the smaller things quickly later on. At the start of the project, the core problems all related to interaction: how to make our solution usable for seven-year-old children.

I then iterated on these problems by developing several candidate solutions. Should the children tap to collect garbage, or should standing close enough pick it up? Should we show them their progress, or would they understand it and be able to spot everything themselves? This approach extended beyond these problems and became a strategy I used throughout the whole project.
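To make the second option concrete, a proximity pickup comes down to a distance check between the AR camera and the item, evaluated every frame. This is a minimal plain C# sketch using System.Numerics. The ProximityPickup name and the fixed pickupRadius are illustrative assumptions, and this is not necessarily the approach the project settled on.

```csharp
using System.Numerics;

// Hypothetical sketch of the "stand close enough to pick it up" option.
public static class ProximityPickup
{
    // True when the device (the AR camera) is within pickupRadius of the item.
    // Comparing squared distances avoids a square root on every check.
    public static bool IsInRange(Vector3 cameraPosition, Vector3 itemPosition, float pickupRadius) =>
        Vector3.DistanceSquared(cameraPosition, itemPosition) <= pickupRadius * pickupRadius;
}
```

In Unity, the two positions would come from Camera.main.transform.position and the item's transform.position. A tap-based option would instead react to a touch or raycast hit on the object.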

After deciding on and developing a final solution, I would (in most cases) present it to our client as a video, GIF, image or .apk build. This was usually enough for him to understand it and give feedback. In some cases, however, I kept the deliverable internal and only discussed it within the team, then restarted the cycle and iterated until I had something I could show our client.

Most of the knowledge I gathered was about developing an XR application in general. I had never done this before and was astounded by how easy it was to start such a project for the first time. Nowadays, Unity enables developers to achieve a lot with little effort.

Most of the experience I gained centred on user experience in AR. Testing our application gave new and necessary insights into how an app like ours could be used, and it also taught me about practices I would rather not repeat. For example, I built our application on image tracking and quickly noticed that it made too many calls, which could severely hurt performance, even before any actual art had been implemented. It also became clear how much UI that is constantly on screen can hurt immersion. That is definitely something I will take with me if I ever work on immersive technologies again.

As mentioned before, I gathered most of my knowledge from tutorials and other videos on the subject. I also read some interesting articles and papers about augmented reality, and talked to other students and teachers to identify key points and pick their brains. In addition, many of my conclusions came from my own observations throughout the process and from my experience playing games that use extended reality technologies.

The learning activities that seem most beneficial for finding a graduation job are those that built my programming skills over the course of the project. This was the first time in my studies that I worked as a programmer instead of a designer, and also the first time I programmed for such a long stretch. I learned a lot and got to experience being the solo developer in a multidisciplinary team. This has given me valuable experience and knowledge that will hopefully make it easier to find a graduation job as a technical game designer, the role I have been aiming for these past couple of years. Combining the design skills and experience I already had with my newly gained programming skills should help me when I try to land such a position.

In the same way that my learning activities contributed to the graduation job I hope to land, they also contributed to my desired profile as a CMGT professional. As mentioned before, I would like to work as a technical game designer. After this experience, I am much more comfortable with the mix of design and programming, and much more confident in my skills in both fields.

I will approach this based on the goals the client set in the first few weeks, not the goals he suddenly introduced towards the end of the project.

The client had the following goals: creating something new together, getting out of our comfort zone to try new things and (hopefully) finishing the project together.

If I take my own goals of developing an AR application with interaction, solid UX and effective communication with the client, I can confidently say that I achieved the client's goals by completing my own. I went further out of my comfort zone than ever before during my studies, as I had never done programming instead of design in a project, nor had I worked on extended reality applications. We also created a unique, new experience, and we came together as a team to do so. The project was finished, albeit in the last week, and has been handed over to an associate of our client. Based on all of the above, I have balanced and achieved the goals set by the client as well as my own personal learning outcomes.

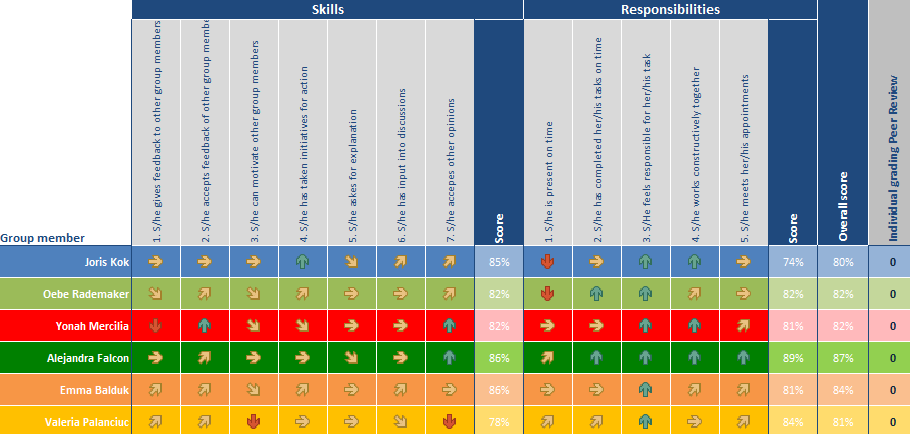

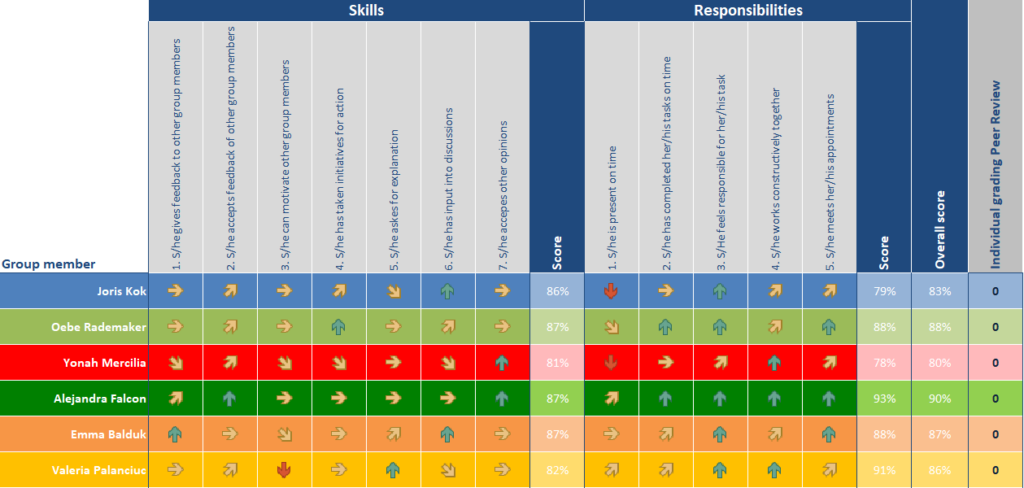

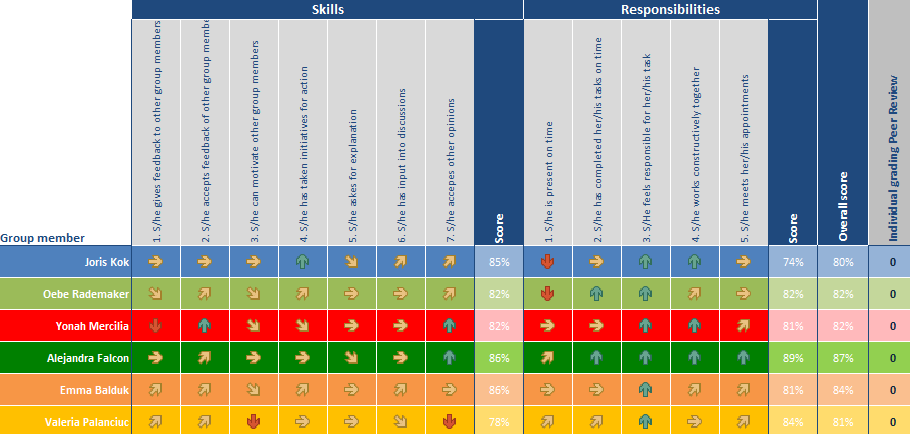

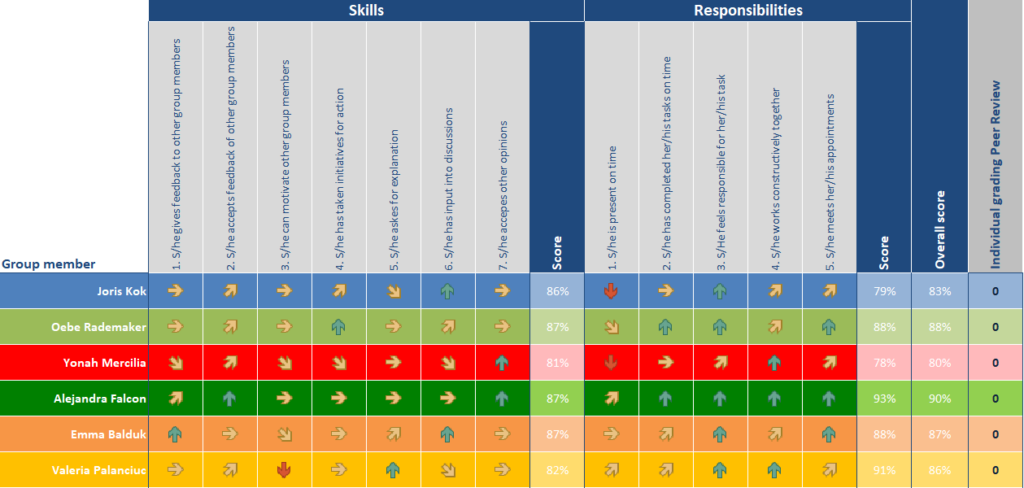

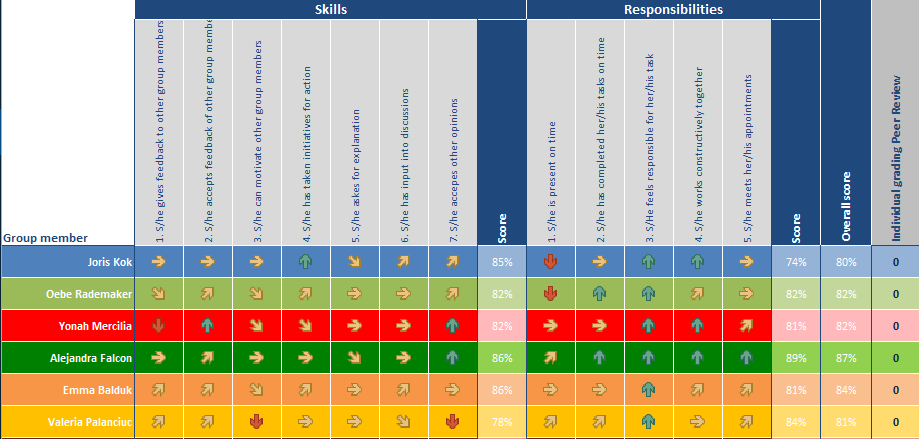

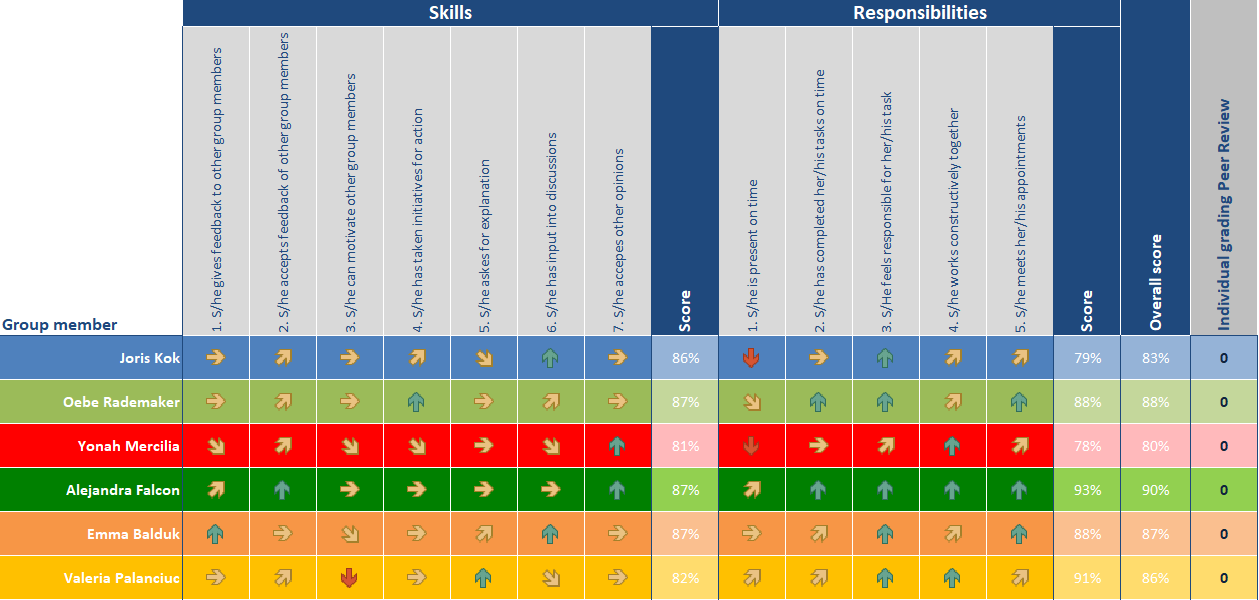

Reflecting on the completed peer review, I can only be happy with the results. I scored quite high, ranking second out of six, which shows that I am a hard-working team member who accepts feedback and provides valuable input in discussions. In addition, I took the initiative for action and completed all tasks on time while showing a sense of responsibility for them.

I am really satisfied with the progress I made from the first peer review at mid-term to the final peer review in the last week. I improved on giving feedback to group members, motivating other members, taking initiative, providing input in discussions, punctuality and, finally, keeping my appointments. The only aspect that decreased was accepting other opinions. I can somewhat understand this, because during the latter part of the project I pushed some decisions through, as I felt we would not make the deadline otherwise. That naturally causes some discomfort, since I was making decisions for everyone because I was responsible for the final implementation.

The main negative point in the peer review is my punctuality. Although it improved since the mid-term peer review, I have to agree with my fellow team members on this one: I was not always on time and often worked from home, so I think it is fair that it is my lowest score. I would not, however, have agreed with the score I received the first time around.

Throughout the development of our experience, I noticed that I am good at pushing through and persevering even when I do not succeed the first few times. I have also come up with creative solutions to problems more than once, and I really feel that this is one of my strengths. Finally, I have shown that I can stand up for myself and take responsibility for my work as a solo developer.

Weaker aspects include losing sight of the project at times: I was not always fully aware of what my tasks were and what I could work on next. Another is punctuality, which I feel would not really be an issue in the industry, as it stems more from my motivation for this specific project and from having the freedom to set my own working schedule at times.

In summary, I feel I would make a solid professional in the games industry. This is not only because of my perseverance, but also because I can come up with creative and effective solutions, have shown that I can work effectively in a multidisciplinary team, and have demonstrated the necessary skills in my portfolio item(s) and blog posts.

Online portfolio item: https://modderjoch.nl/augmented-reality-experience-edens-golden-rule/

Online report: https://www.modderjoch.nl/imts (password is “imts2024”)

From February until the end of June 2024, I worked on developing an Augmented Reality (AR) experience designed for the JCK in Amsterdam.

The goal of the project was to create a new take on the previously made Eden’s and the Golden Rule VR installation. This ‘Golden Rule’ is as follows:

“In everything, do to others what you would have them do to you.”

Gospel of Matthew (7:12)

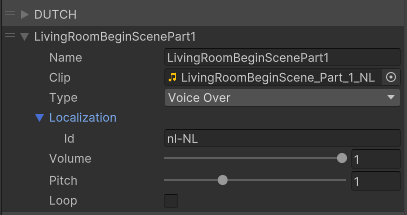

During the project I was part of a team of three artists, two designers and one developer (me). I was responsible for all programming, from implementing the voice overs to building a game-wide system that keeps track of scenes and advances through the different phases. In addition, I developed several smaller systems to solve more specific problems we encountered during development.

My first few weeks revolved around getting to know AR and the inner workings of Unity's Extended Reality tooling. I had never worked with anything like it before and started learning from day one.

After learning the basics, I developed a few iterations of a simple prototype, each usually adding another simple feature such as adjustable model scale or continuous animation. All of these prototypes used image tracking as the basis for the AR. Image tracking is included in Unity's AR Foundation package, but I customised it to support more specific features, such as the scene management system added later on.

Some of the prototypes in question:

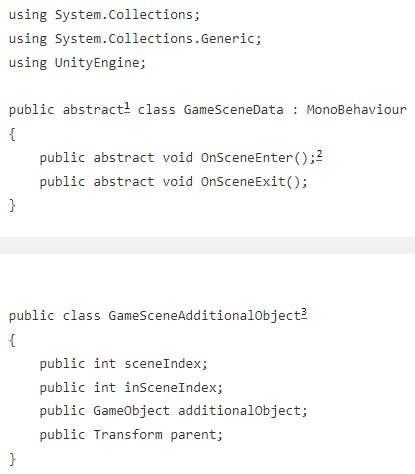

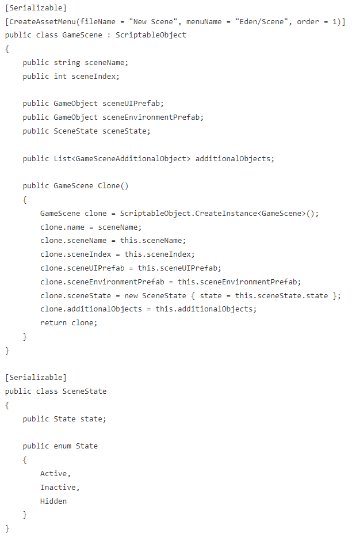

To allow for a scalable and flexible scene setup, I decided to use ScriptableObjects. This let me store data per scene that could be accessed and modified in a simple way. Each one could hold the necessary references, the scene index, the environment prefab, and so on.
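The per-scene data idea can be sketched without Unity as a plain class plus a lookup by scene index. In the project these were ScriptableObject assets. Here, SceneData is an ordinary C# class and the environment prefab is represented by a string name. The field names and the SceneDatabase helper are assumptions for illustration only.

```csharp
using System.Collections.Generic;
using System.Linq;

// Engine-free stand-in for a per-scene data asset (hypothetical fields).
// In Unity this class would derive from ScriptableObject and the prefab would be a GameObject reference.
public class SceneData
{
    public int SceneIndex;
    public string EnvironmentPrefab = "";
    public List<string> VoiceOverClips = new List<string>();
}

// Hypothetical lookup from scene index to its data asset.
public class SceneDatabase
{
    private readonly Dictionary<int, SceneData> byIndex;

    // ToDictionary throws if two assets share an index, which surfaces setup mistakes early.
    public SceneDatabase(IEnumerable<SceneData> scenes) =>
        byIndex = scenes.ToDictionary(s => s.SceneIndex);

    public SceneData Get(int index) =>
        byIndex.TryGetValue(index, out var data)
            ? data
            : throw new KeyNotFoundException($"No SceneData for index {index}.");
}
```

In Unity, such a class would usually also carry a [CreateAssetMenu] attribute, so that one asset per scene can be created from the editor.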

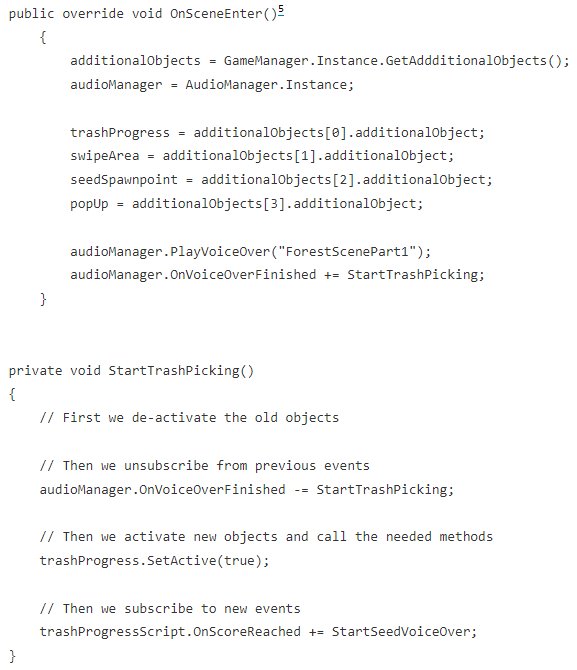

I also used an abstract base class for the scene-specific scripts. This allowed an overarching manager script to call each scene's OnSceneEnter() method whenever that scene became the active scene index. Within the scene-specific scripts, I then used event subscription and unsubscription to handle multiple voice overs that depended on other parts of the scene, using events and listeners to advance through it.
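The combination of an abstract scene class, a manager that calls OnSceneEnter() for the active index, and voice overs chained through event subscription could look roughly like this in plain C#. Only OnSceneEnter() comes from the text above. SceneScript, VoiceOverPlayer, IntroScene, SceneFlowManager and the clip names are hypothetical, and the audio player is simulated so the flow can run outside Unity.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical base class: each scene script implements OnSceneEnter().
public abstract class SceneScript
{
    public abstract int SceneIndex { get; }
    public abstract void OnSceneEnter();
}

// Hypothetical stand-in for an audio player that reports when a clip has ended.
public class VoiceOverPlayer
{
    public event Action<string> Finished;
    public List<string> Played { get; } = new List<string>();

    public void Play(string clip) => Played.Add(clip);

    // In a real game the audio system would call this when playback ends.
    public void NotifyFinished(string clip) => Finished?.Invoke(clip);
}

// Example scene that chains two voice overs through event subscription.
public class IntroScene : SceneScript
{
    private readonly VoiceOverPlayer voice;
    public int Step { get; private set; }

    public IntroScene(VoiceOverPlayer voice) => this.voice = voice;

    public override int SceneIndex => 0;

    public override void OnSceneEnter()
    {
        Step = 0;
        voice.Finished += OnVoiceOverFinished; // listen only while this scene is active
        voice.Play("intro_1");
    }

    private void OnVoiceOverFinished(string clip)
    {
        Step++;
        if (clip == "intro_1")
            voice.Play("intro_2");                 // advance to the next voice over
        else
            voice.Finished -= OnVoiceOverFinished; // last clip: stop listening
    }
}

// Overarching manager: calls OnSceneEnter() on the scene matching the active index.
public class SceneFlowManager
{
    private readonly Dictionary<int, SceneScript> scenes = new Dictionary<int, SceneScript>();
    public int CurrentSceneIndex { get; private set; } = -1;

    public void Register(SceneScript scene) => scenes[scene.SceneIndex] = scene;

    public void ActivateScene(int index)
    {
        CurrentSceneIndex = index;
        if (scenes.TryGetValue(index, out var scene))
            scene.OnSceneEnter();
    }
}
```

Each scene subscribes in OnSceneEnter() and unsubscribes once its last clip has played, so a finished scene does not react to voice overs that belong to later scenes.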

Having set up the whole project this way, I then worked through the first scene. Because I had already done it once, this setup allowed me to create the other scenes easily and efficiently. I also created an Interactable class with an event that is raised on pickup, which made it easy to add new interactables.
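Below is a minimal sketch of an Interactable that raises an event on pickup, with a listener that tracks when every collectable in a scene has been found. Apart from the Interactable name, the members and the CollectionTracker class are assumptions. The bracelet and compass are used only as example IDs.

```csharp
using System;

// Hypothetical sketch: a single Interactable type raises an event on pickup,
// so new collectables only need to be placed and listened to, not re-coded.
public class Interactable
{
    public string Id { get; }
    public bool IsCollected { get; private set; }
    public event Action<Interactable> PickedUp;

    public Interactable(string id) => Id = id;

    public void Pickup()
    {
        if (IsCollected) return; // ignore repeated taps on the same object
        IsCollected = true;
        PickedUp?.Invoke(this);
    }
}

// Example listener: counts collected items and reports when all have been found.
public class CollectionTracker
{
    private readonly int required;
    public int Collected { get; private set; }
    public bool IsComplete => Collected >= required;

    public CollectionTracker(params Interactable[] items)
    {
        required = items.Length;
        foreach (var item in items)
            item.PickedUp += _ => Collected++;
    }
}
```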

Throughout development we also encountered some issues. A piece of software called Rive (used for vector animations) did not work with our Unity version and was therefore abandoned. However, we still needed the animations, so I wrote a simple script to swap images at a set rate. Another issue was that we initially planned to 3D model all characters but, due to time constraints, decided against it and went back to 2D. This meant we could not use mocap animations, and I once again had to come up with a creative solution. I set up a system that changes a character's image after x seconds to create the illusion of animation in a sort of 'visual novel' style.
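Both workarounds come down to swapping images on a timer. The sketch below is a plain C# version of that idea, with frames represented as strings. The Flipbook name and the frames-per-second parameter are assumptions. In Unity, Tick would be called from Update() with Time.deltaTime, and CurrentFrame would be assigned to an Image or SpriteRenderer.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical flipbook: cycles through a list of frames at a fixed rate.
public class Flipbook
{
    private readonly IReadOnlyList<string> frames;
    private readonly float secondsPerFrame;
    private float elapsed;

    public int FrameIndex { get; private set; }
    public string CurrentFrame => frames[FrameIndex];

    public Flipbook(IReadOnlyList<string> frames, float framesPerSecond)
    {
        if (frames.Count == 0) throw new ArgumentException("At least one frame is required.");
        if (framesPerSecond <= 0f) throw new ArgumentOutOfRangeException(nameof(framesPerSecond));
        this.frames = frames;
        secondsPerFrame = 1f / framesPerSecond;
    }

    // Call once per rendered frame with that frame's delta time.
    public void Tick(float deltaTime)
    {
        elapsed += deltaTime;
        // A long frame may need to skip several images; the loop keeps timing accurate.
        while (elapsed >= secondsPerFrame)
        {
            elapsed -= secondsPerFrame;
            FrameIndex = (FrameIndex + 1) % frames.Count; // loop back to the first frame
        }
    }
}
```

For the 'visual novel' character poses, the same class works at a low rate. For example, 0.5 frames per second changes the pose every two seconds.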

For the next few scenes I had to implement some new interactions: collecting paper that flew everywhere, gathering 'collectables' such as a bracelet and a compass, and more.

Finally, the full playthrough, combining everything shown previously along with some other additions I had no separate footage of.

Note: there were still some inconsistencies when this was recorded, but they have all been ironed out since.

To make an accurate cost calculation, we researched average wages and software license costs, and discussed the costs with our client.

In the end, we identified several types of costs.

All of these items combined with their estimated costs result in the table below. It is truly fascinating to see what a simple semester-long project like this already costs. Wages are by far the highest cost, so it seems beneficial for a client to run a project like this one with students.

| Item | Cost |
|---|---|
| Junior development team of 6, each working 480 hours | 480 hours × €22.50 × 6 people = €64,800 |
| AR-marker pillars | €5,000 |
| Hardware (tablet) rental, 1 year | €39 × 12 months = €468 |
| Software licenses | €5,571 |
| Music licenses | €1,500 |
| Voice acting | €3,500 |
| Script writing & QA | €3,500 |
| Translations | €1,000 |
| TOTAL | €85,339 |
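The table's arithmetic can be checked term by term (all amounts in euros, using the rates and counts listed in the table):

$$
\begin{aligned}
480 \times 22.50 \times 6 &= 64{,}800 \\
39 \times 12 &= 468 \\
64{,}800 + 5{,}000 + 468 + 5{,}571 + 1{,}500 + 3{,}500 + 3{,}500 + 1{,}000 &= 85{,}339
\end{aligned}
$$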

JUNE 24 → JUNE 27 – 2024

During the meeting with Frank, we discussed what else he wanted me to add this week, and based on that I implemented a few fixes.

One of the main fixes revolved around preventing a game-breaking issue where the player could not proceed in scene three after the game had been reset.

My solution was to use the Exit state that Unity provides in its Animator. By using this and also saving the original positions of the flower packs, I was able to work around the issue.

I created another release on our GitHub that includes these changes and small fixes. I also sent Frank this newly updated version.

The research I did this week consisted of talking with Frank and figuring out what I could still add to the project.

My final week was a good one. Having met Frank Bonsma, I think it would have been better for us and the project if we had communicated with our client through him instead. He seemed to understand what I was talking about, valued me, showed interest and was overall a very calming presence.

I was really happy with the last few fixes I got to implement this week and especially the pace I could do it at. It only took me a few hours to implement everything. The rest of the week was spent on rewriting my blogs, collecting images and videos for the presentation and preparing for the deadline.

More about the general process, the project, client communication and learning goals can be found in my personal reflection document, available on the IMTS page.

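```csharp
// Restores a UI element (such as a flower pack) to the position it started at.
public void ResetToStartingPosition()
{
    rectTransform.anchoredPosition = startingPosition;
}
```

```csharp
// Excerpt from the scene logic (the surrounding method is not shown).
if (GameManager.Instance.CurrentSceneIndex <= 4)
{
    // Below scene index 4, clear the game-over flag
    // (for example one left over from before the game was reset).
    if (GameManager.Instance.CurrentSceneIndex < 4)
    {
        isGameOver = false;
    }

    // Stop here if the game has already ended.
    if (isGameOver)
    {
        return;
    }
}
```

JUNE 17 → JUNE 20 – 2024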

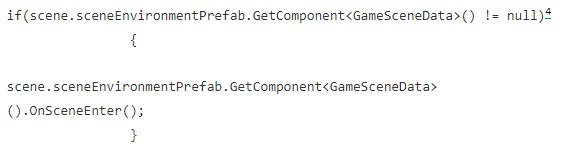

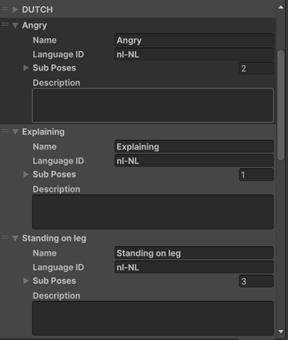

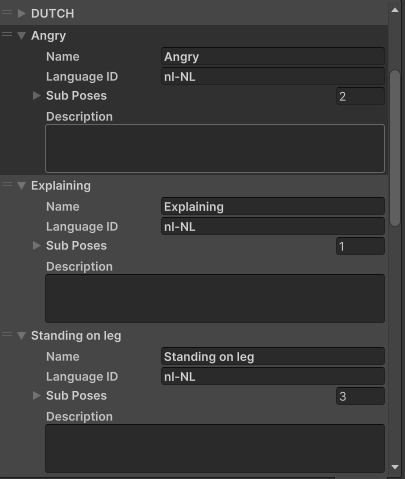

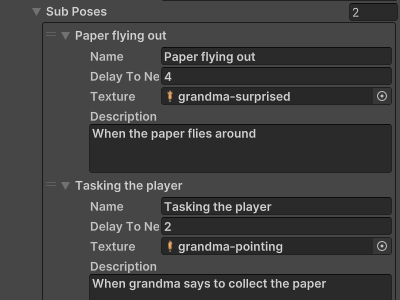

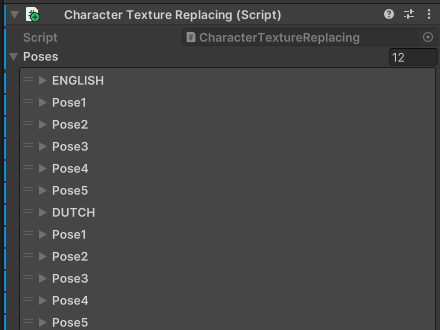

This week's development was again mostly content creation, which makes sense given that it is the end of the development cycle. However, I did get to brainstorm about one new issue: multiple language support for the animation frames.

To figure out the best way to tackle this issue, I looked through my existing scripts to see how I had handled similar problems before. This ultimately led me to my final solution.

The solution was not that difficult in the end: I had to find a way to 'bundle' a language identifier with a set of frames.

To do this, I added a language ID string to the CharacterPose class. At runtime, Awake() appends this string to each pose's name. After that, I could simply call script.SetPose("Pose1" + LocalizationSettings.SelectedLocale.Formatter); to get the pose for the currently selected language. This also works when the language is changed during the game, and it provides an elegant and flexible solution to the problem.
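The same 'name plus language ID' idea can also be expressed by building a lookup key once, instead of rewriting each pose's name. This is a hypothetical plain C# sketch, not the project's SetPose(). LocalizedPose, PoseLibrary and the language codes in the test are assumptions.

```csharp
using System.Collections.Generic;

// Hypothetical pose entry: the same pose name exists once per language.
public class LocalizedPose
{
    public string Name = "";
    public string LanguageId = "";
    public List<string> Frames = new List<string>();
}

// Builds "name + language ID" keys once and resolves poses for the selected language.
public class PoseLibrary
{
    private readonly Dictionary<string, LocalizedPose> byKey = new Dictionary<string, LocalizedPose>();

    public PoseLibrary(IEnumerable<LocalizedPose> poses)
    {
        foreach (var pose in poses)
            byKey[pose.Name + pose.LanguageId] = pose;
    }

    // The language is passed on every call, so changing it mid-game
    // simply resolves to the other language's frames on the next call.
    public LocalizedPose Get(string poseName, string languageId) =>
        byKey.TryGetValue(poseName + languageId, out var pose)
            ? pose
            : throw new KeyNotFoundException($"No pose '{poseName}' for language '{languageId}'.");
}
```

One possible advantage of this design is that the original names stay intact, so running the setup a second time cannot append the suffix twice.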

As communication with our client had been terminated, I had no deliverables for him this week. I did, however, keep my team members updated by showing them GIFs/videos of my progress.

I also made a release on our GitHub to prepare for the final handover. This was the 1.0.0 version.

Next week will be the final week of this project. I will hand over the .apk, marker images and source code to an associate of our client. After that, I will use the rest of the week to add any changes I still have time for and to work on all final deliverables for IMT&S.

This week's research consisted of going through my own code to find which solution I had used before and which would best apply to the problem at hand.

The week was very peaceful compared to previous weeks, mostly because we no longer had the client breathing down our necks. This led to a more comfortable and relaxed atmosphere in the team and also helped me tackle more issues this week.

Next to that, I am quite happy with how I handled some problems that arose this week and I am looking forward to the final week.

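```csharp
protected void Awake()
{
    // Append each pose's language ID to its name, so that SetPose("Pose1" + <selected locale>)
    // resolves to the matching language variant of the pose.
    for (int i = 0; i < poses.Count; i++)
    {
        poses[i].name = poses[i].name + poses[i].languageID;
    }
}

public void SetPose(string pose)
{
    textures.Clear();
    // (the rest of this method is not shown in the excerpt)
}
```

```csharp
using System.Collections.Generic;
using UnityEngine;

// One named pose for a character in a specific language.
[System.Serializable]
public class CharacterPose
{
    public string name;
    public string languageID; // appended to name in Awake()
    public List<CharacterSubPose> subPoses;

    [TextArea(3, 10)]
    public string description;
}
```

JUNE 10 → JUNE 13 – 2024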

Most of this week was spent creating content using the tools I had set up previously. This meant there were no real new developments per se; instead, I finally profited from the work I had put in weeks ago.

My main points this week were adding solid-looking animations as well as properly implementing the book and other interactions.

I added the wolf head rotation quite quickly, as Emma, the artist responsible for the wolf, had set up the rig in an easy-to-understand way. This meant I could simply write a new script that rotates the correct bone towards the camera.

To add the grandma's animation, I simply used the tool I had written before. The only time-consuming part was listening to the voice over multiple times to get the timing right.

The compass interaction was a simple reuse of the bracelet interaction, which I had set up in a flexible manner to accommodate other interactables like the compass too.

Finally, the book interaction was not too hard to implement either. Alejandra had done a superb job on the animation, and all I had to do was place the pieces of paper in the book correctly.

As communication with our client had been terminated, I had no deliverables this week. I did, however, keep my team members updated by showing them GIFs/videos of my progress.

Next week I’ll work on implementing a major UI object made by Joris as well as all other animations for the characters.

No real research was conducted this week.

Because Monday's meeting had been a very difficult one, other team members and I had lost most of our motivation. This made the week harder than others, but it also brought us closer together than before.

As far as implementing content goes, I am still very satisfied with everything I got done this week, especially given the mess our client caused. In the face of adversity, we still pushed through and kept working at a steady pace.

Another big plus this week was that I finally was able to see how valuable it is to set up a project like this in a flexible and scalable manner. I was able to do more in less time because I used systems I created a few weeks ago. This felt very rewarding.

```csharp
// Turns the wolf's head bone towards the AR camera within a limited range.
// head, speed and ClampRotation() are defined elsewhere in the script.
protected void FixedUpdate()
{
    // Direction from the head to the camera
    Vector3 targetDirection = Camera.main.transform.position - head.position;

    // Maximum rotation for this step: speed times the step duration
    // (inside FixedUpdate, Time.deltaTime returns the fixed timestep)
    float singleStep = speed * Time.deltaTime;

    // Rotate the current forward vector one step towards the target direction
    Vector3 newDirection = Vector3.RotateTowards(head.forward, targetDirection, singleStep, 0.0f);

    // Debug ray showing the new facing direction (visible in the Scene view)
    Debug.DrawRay(head.position, newDirection, Color.red);

    // Apply a rotation one step closer to the target
    head.rotation = Quaternion.LookRotation(newDirection);

    // Clamp the rotation to prevent a full 360-degree turn and excessive looking up/down
    const float minHorizontalAngle = -140f; // furthest rotation to the left from the initial forward direction
    const float maxHorizontalAngle = 0f;    // furthest rotation to the right from the initial forward direction
    const float minVerticalAngle = 30f;     // limit for looking down
    const float maxVerticalAngle = 100f;    // limit for looking up

    head.rotation = ClampRotation(head.rotation, minHorizontalAngle, maxHorizontalAngle, minVerticalAngle, maxVerticalAngle);
}
```

JUNE 3 → JUNE 6 – 2024

My development this week was about tying up loose ends, giving the player a way to reset their game, and upgrading the visuals in some parts.

The main issue I had to tackle with regard to the game reset was getting it to work within my existing setup.

Another problem I was tasked with fixing was making sure the flower pack looked good. I decided to drive its animation from code instead of animating it by hand, as that was more flexible in my opinion.

I eventually managed to get the reset working by separating out the StartGame() method in the GameManager and allowing it to be called from other scripts. This way I could control when to start a new game and take the appropriate measures.
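The idea of exposing StartGame() so that other scripts decide when a game begins can be sketched as follows. This is a hypothetical plain-C# GameFlow class, not the project's GameManager; it assumes a game can only be started when none is running, and that the reset's job is to clear the run state so StartGame() can be called again.

```csharp
using System;

// Hypothetical sketch (not the project's GameManager): StartGame() is public so
// the main menu, or any other script, decides when a playthrough begins.
public sealed class GameFlow
{
    public bool IsRunning { get; private set; }
    public int ActiveSceneIndex { get; private set; } = -1;
    public int GamesStarted { get; private set; }

    // Lets other systems react when a new playthrough begins.
    public event Action<int> GameStarted;

    // Returns false if a game is already in progress.
    public bool StartGame(int startSceneIndex = 0)
    {
        if (IsRunning) return false;
        IsRunning = true;
        ActiveSceneIndex = startSceneIndex;
        GamesStarted++;
        GameStarted?.Invoke(startSceneIndex);
        return true;
    }

    // Clears the run state so StartGame() can be called again afterwards.
    public void ResetGame()
    {
        IsRunning = false;
        ActiveSceneIndex = -1;
    }
}
```

Keeping the "already running" check inside StartGame() means callers such as a menu button and the reset flow don't need to track each other's state.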

To animate the packs moving to the middle efficiently, I decided to use a custom script. This made things easier, as all packs can use the same script instead of each having to be animated individually (see code below).
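The pack script listed at the end of this week's entry moves each pack with Vector2.Lerp(current, target, speed * Time.deltaTime) and snaps it into place once it is within 0.1 units. A sketch of the same ease-and-snap idea outside Unity follows, using System.Numerics; MoveToTarget and its members are hypothetical. One deliberate change: the easing factor is 1 - exp(-speed * deltaTime) instead of speed * deltaTime, a common variant whose result does not depend on the frame rate.

```csharp
using System;
using System.Numerics;

// Hypothetical plain-C# version of the "move pack to the middle" logic,
// using System.Numerics instead of Unity types.
public sealed class MoveToTarget
{
    private readonly Vector2 target;
    private readonly float speed;
    private readonly float snapDistance;

    public Vector2 Position { get; private set; }
    public bool IsMoving { get; private set; } = true;

    // Raised once when the target is reached (the role OnMiddleReached plays).
    public event Action Reached;

    public MoveToTarget(Vector2 start, Vector2 target, float speed, float snapDistance = 0.1f)
    {
        Position = start;
        this.target = target;
        this.speed = speed;
        this.snapDistance = snapDistance;
    }

    // Call once per frame with that frame's duration in seconds.
    public void Step(float deltaTime)
    {
        if (!IsMoving) return;

        // Exponential easing: the remaining distance shrinks by exp(-speed * t)
        // over a total time t, however that time is split into frames.
        float t = 1f - MathF.Exp(-speed * deltaTime);
        Position = Vector2.Lerp(Position, target, t);

        // Easing alone never quite arrives, so snap once close enough.
        if (Vector2.Distance(Position, target) < snapDistance)
        {
            Position = target;
            IsMoving = false;
            Reached?.Invoke();
        }
    }
}
```

Usage: create one MoveToTarget per pack, subscribe to Reached, and call Step(deltaTime) every frame until IsMoving becomes false.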

These changes weren’t communicated externally, but they were shown to my team members for feedback.

Next week I’ll work on improving the game resetting, implementing the final interactables for the other scenes, implementing the art for the final scenes and (depending on the client) importing the Dutch voice overs.

No research worth mentioning was conducted this week.

Communication with the client got more tense this week, which benefited neither us nor the project.

Other than that, I am happy with the additions I was able to make. The flower pack interaction really improves the whole feel of the forest scene, and the game reset is a valuable addition and definitely something I should have thought of sooner myself.

```csharp
public event Action OnMiddleReached;

protected void Update()
{
    // Pressing 8 triggers the movement
    if (Input.GetKeyDown(KeyCode.Alpha8))
    {
        StartMoveToMiddle();
    }

    if (moveToMiddle)
    {
        // Ease the pack towards the target position
        rectTransform.anchoredPosition = Vector2.Lerp(rectTransform.anchoredPosition, targetPosition, speed * Time.deltaTime);

        // Snap into place once close enough, then stop moving
        if (Vector2.Distance(rectTransform.anchoredPosition, targetPosition) < 0.1f)
        {
            rectTransform.anchoredPosition = targetPosition;
            moveToMiddle = false;
            OnMiddleReached?.Invoke(); // notify listeners; the declared event was never raised in the excerpt
        }
    }
}
```

```csharp
/// <summary>
/// IEnumerator to reset the game and instantiate new environments.
/// </summary>
/// <returns>An enumerator to be run as a coroutine.</returns>
public IEnumerator ResetGame()
{
    trashProgress.ResetScore();

    yield return new WaitUntil(imageTracking.EnvironmentsAreRemoved);
    imageTracking.RemoveEnvironments(false);

    SetSpawnablePrefabs();
    List<GameObject> newPrefabs = imageTracking.SetSpawnablePrefabs();

    // The loop header was lost from the original listing; reconstructed here (loop bound assumed)
    for (int i = 0; i < newPrefabs.Count; i++)
    {
        GameManager.Instance.modifiableScenes[i].sceneEnvironmentPrefab = newPrefabs[i];
    }

    startSceneIndex = 0;
    SetActiveScene(startSceneIndex);

    AudioManager.Instance.StopAllVoiceOvers();
    DeactivateAdditionalObjects();
    mainMenuUI.SetActive(true);
}
```

MAY 27 → MAY 30 – 2024

In terms of development, the main issues this week were making it possible to reset the game and providing a way to collect items.

I consulted other team members about how they would handle collection and researched other games. For the reset, there was no real research to be done other than deep-diving back into my own code to identify areas where issues could arise and to find a way to add the feature.

The main issue with the collectable was that it could be difficult to instantiate it in the world itself, and that it might not be easily visible to the player.

As for the reset, the main problem was that my current structure did not per se support a player exiting a scene.

My solution for the collectable issue was to parent the object to an empty object in front of the camera. That way, the collectable would always be visible and easy to access in code. In addition, I reused my ‘Interactable’ script as a base for any other collectable object and added a ‘Bracelet’ script that inherits from it.
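Parenting the collectable to an anchor in front of the camera works because the anchor's world position is recomputed from the camera's pose every frame. The arithmetic behind that is sketched below in plain C# with System.Numerics, assuming +Z is the camera's forward axis as in Unity; CameraAnchor.WorldPosition is a hypothetical name. Inside Unity, the parent-child relationship (or Transform.TransformPoint) performs an equivalent calculation automatically.

```csharp
using System;
using System.Numerics;

// Hypothetical illustration: an object parented to an anchor in front of the
// camera has its world position derived from the camera pose every frame.
public static class CameraAnchor
{
    // localOffset is expressed in camera space, e.g. (0, -0.1, 0.5) for
    // "half a unit ahead and slightly below the view centre".
    public static Vector3 WorldPosition(Vector3 cameraPosition, Quaternion cameraRotation, Vector3 localOffset)
    {
        // Rotate the offset into world space, then move it to the camera.
        return cameraPosition + Vector3.Transform(localOffset, cameraRotation);
    }
}
```

Whatever way the player turns the device, the result stays at the same distance in front of the camera, which is why the collectable cannot drift out of view.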

The reset was more difficult to find a solution for. Eventually I managed to close off most of the things that depended on one another; however, voice-overs still kept playing after a reset, so the feature was postponed to next week.
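One way to keep a reset from missing pieces, such as voice-overs that keep playing, is to have every system with per-playthrough state register with a single coordinator that the reset walks through. The sketch below illustrates that pattern in plain C#; IResettable, ResetCoordinator and VoiceOverPlayer are hypothetical and not part of the project, whose finished ResetGame() coroutine instead calls AudioManager.Instance.StopAllVoiceOvers() directly.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical pattern: anything holding per-playthrough state implements this.
public interface IResettable
{
    void ResetState();
}

public sealed class ResetCoordinator
{
    private readonly List<IResettable> systems = new List<IResettable>();

    public int Count => systems.Count;

    // Each system registers once, e.g. when it is created.
    public void Register(IResettable system)
    {
        if (!systems.Contains(system)) systems.Add(system);
    }

    // Resets every registered system in registration order.
    public void ResetAll()
    {
        foreach (IResettable system in systems) system.ResetState();
    }
}

// Stand-in for an audio system that tracks the voice-overs currently playing.
public sealed class VoiceOverPlayer : IResettable
{
    private readonly HashSet<string> playing = new HashSet<string>();

    public int PlayingCount => playing.Count;

    public void Play(string clip) => playing.Add(clip);

    // "Stop all voice-overs" when the game is reset.
    public void ResetState() => playing.Clear();
}
```

The design choice here is that the reset no longer needs to know every system by name; forgetting one becomes a registration bug rather than a hidden dependency.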

I created another release on our GitHub that shows the complete playthrough but uses greyboxes for scenes one, four and five. This was done at the client’s request.

Next week I’ll work on the flower pack animation and interaction. I will also take another look at the game reset/pausing.

The research I did this week mainly came from conversing with my fellow team members to identify some areas for improvement and pick their brains about collectables.

Some things about the project were not so much fun anymore, mainly our client and the communication with him, which became more and more difficult and tense.

Besides that, I was not too happy with the way I had set things up, now that I had to figure out how to add a game reset to an existing framework that did not really support it.

```csharp
public class Bracelet : Interactable
{
    public void CollectBracelet()
    {
        // Play the collection sound, then notify listeners
        AudioManager.Instance.Play("Paper1");
        OnBraceletCollected.Invoke();
    }
}
```